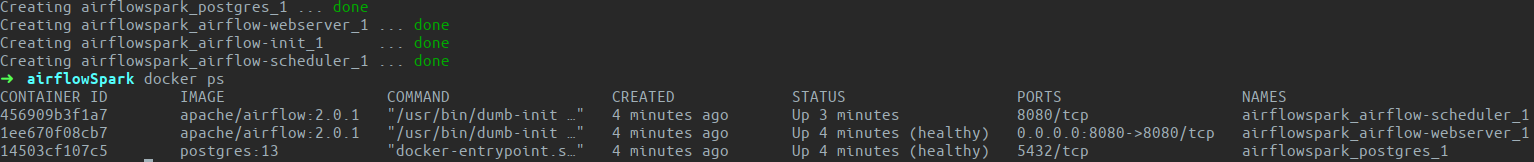

Failed tasks are displayed in red in the Graph and Tree views of the Airflow UI, and the workflow can be restarted from the point of failure. Managing configuration, however, can be time-consuming, because settings can be made in several different ways.
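Configuration can be set in several places. For example, Airflow reads options from its `airflow.cfg` file and also from environment variables named `AIRFLOW__{SECTION}__{KEY}`, and the environment variable takes precedence. The sketch below mimics that lookup order using only the standard library. It is a simplified illustration: Airflow also supports `_CMD` and `_SECRET` variants and built-in defaults, which are omitted here, and the sample option values are hypothetical.

```python
import configparser
import os
from typing import Mapping, Optional

# Hypothetical airflow.cfg contents, inlined so the sketch runs on its own.
CFG_TEXT = """
[core]
dags_folder = /opt/airflow/dags
parallelism = 32
"""


def get_option(parser: configparser.ConfigParser, section: str, key: str,
               environ: Optional[Mapping[str, str]] = None) -> Optional[str]:
    """Return an option value, letting AIRFLOW__{SECTION}__{KEY} override the file."""
    environ = os.environ if environ is None else environ
    env_name = f"AIRFLOW__{section.upper()}__{key.upper()}"
    if env_name in environ:
        return environ[env_name]
    # Fall back to the config file; None if the section or key is missing.
    return parser.get(section, key, fallback=None)


parser = configparser.ConfigParser()
parser.read_string(CFG_TEXT)
print(get_option(parser, "core", "parallelism", environ={}))  # 32
print(get_option(parser, "core", "parallelism",
                 environ={"AIRFLOW__CORE__PARALLELISM": "64"}))  # 64
```

Passing `environ` explicitly keeps the example deterministic. Omitting it makes the function read the real process environment.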

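When a workflow is restarted from the point of failure, only the failed task and everything downstream of it need to run again. Successful upstream tasks are left alone. In the UI this is typically done by clearing the failed task together with its downstream tasks. The plain-Python sketch below shows that selection. The task names and the dictionary-based graph are hypothetical, and this is not Airflow's API.

```python
from collections import deque

# Hypothetical pipeline: each task maps to the tasks directly downstream of it.
DOWNSTREAM = {
    "extract": ["transform"],
    "transform": ["load", "report"],
    "load": [],
    "report": [],
}


def tasks_to_rerun(downstream: dict[str, list[str]], failed: str) -> set[str]:
    """Return the failed task plus every task reachable downstream of it (BFS)."""
    to_rerun = {failed}
    queue = deque([failed])
    while queue:
        task = queue.popleft()
        for child in downstream[task]:
            if child not in to_rerun:
                to_rerun.add(child)
                queue.append(child)
    return to_rerun


# If "transform" failed, "extract" already succeeded and is not re-run.
print(sorted(tasks_to_rerun(DOWNSTREAM, "transform")))  # ['load', 'report', 'transform']
```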
Benefits of Using Apache Airflow Technology

- Creates complex and efficient pipelines.
- Integrates with cloud services and databases.
- Allows incremental processing and easy re-computation.
- Allows monitoring and visualizing of pipeline runs.
- Is easy to combine with other tools for expanded capabilities.
- Allows backfilling: it can perform historical runs or reprocess data.

Write, schedule, iterate, and monitor data pipelines (workflows) and tasks with Krasamo's Apache Airflow Consulting Services.

- Define Pipeline Structure: Define a DAG of tasks and dependencies in a Python DAG file. This creates the workflow and the DAG structure that allows operations to be executed.
- Airflow Scheduler: The scheduler checks and parses tasks and dependencies from the DAG files and determines the task instances and the set of conditions under which they run. It runs at specific times or discrete intervals and submits tasks to the executors.
- Airflow Executor: The executor handles task execution. By default it runs tasks inside the scheduler, or it can push task execution out to Airflow workers. It also registers task state changes.
- Schedule DAG Intervals: Schedule intervals and parameters (params) determine when the pipeline is run (triggered) for each DAG execution. Airflow checks whether dependencies are completed and adds tasks to the execution queue.
- Airflow Workers: Workers pick up tasks from the queue, execute them, and pass the results to Airflow's metastore.
- Airflow Webserver: The webserver (an HTTP server) provides a web interface used to visualize and monitor DAG task runs and results and to debug their behavior.
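The components listed above form a loop. The scheduler queues tasks whose dependencies are complete, a worker picks each one up and executes it, and the resulting state is recorded in the metastore. The toy, single-process simulation below only illustrates that flow. It is not how Airflow's scheduler, executors, or metadata database are implemented. The task names are hypothetical, and the state strings are chosen to echo Airflow's task-instance states ("none", "queued", "running", "success").

```python
from collections import deque

# Hypothetical DAG: task -> set of upstream tasks it depends on.
UPSTREAM = {
    "extract": set(),
    "transform": {"extract"},
    "validate": {"extract"},
    "load": {"transform", "validate"},
}


def run_dag(upstream: dict[str, set[str]]) -> list[str]:
    """Simulate one DAG run and return the order in which tasks executed."""
    metastore: dict[str, str] = {task: "none" for task in upstream}  # stand-in for the metadata DB
    work_queue: deque[str] = deque()
    execution_order: list[str] = []

    while any(state != "success" for state in metastore.values()):
        # Scheduler step: queue every task whose upstream tasks have all succeeded.
        for task, deps in upstream.items():
            if metastore[task] == "none" and all(metastore[d] == "success" for d in deps):
                metastore[task] = "queued"
                work_queue.append(task)
        if not work_queue:
            raise RuntimeError("no runnable tasks left; check for a cycle or a missing task")
        # Worker step: pick up one queued task, "execute" it, and report its state.
        task = work_queue.popleft()
        metastore[task] = "running"
        execution_order.append(task)  # real work would happen here
        metastore[task] = "success"
    return execution_order


print(run_dag(UPSTREAM))  # ['extract', 'transform', 'validate', 'load']
```

A real deployment runs the scheduler and workers as separate processes that share state through the database and a queue. This sketch interleaves them in one loop only for readability.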

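Schedule intervals, backfilling, and incremental processing are closely related. Each DAG run covers one data interval, and backfilling creates runs for intervals in the past. The plain-Python sketch below splits a date range into fixed daily intervals. It is a simplification that assumes a fixed-length schedule (Airflow's own timetables also handle cron expressions, time zones, and `catchup` settings), and it does not use Airflow's API.

```python
from datetime import datetime, timedelta


def data_intervals(start: datetime, end: datetime,
                   every: timedelta) -> list[tuple[datetime, datetime]]:
    """Split [start, end) into consecutive intervals of length `every`, one per run."""
    intervals = []
    current = start
    while current + every <= end:
        intervals.append((current, current + every))
        current += every
    return intervals


# Backfilling three days with a daily schedule: one run per completed day.
runs = data_intervals(datetime(2024, 1, 1), datetime(2024, 1, 4), timedelta(days=1))
for interval_start, interval_end in runs:
    # Each run would process only the records that fall inside its own interval.
    print(interval_start.date(), "->", interval_end.date())
```

Because each run is bounded by its own interval, a single day can be re-computed without touching the others. This is what makes incremental processing and reprocessing cheap.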

The dashboard provides several ways to visualize and manage DAG runs and task instances, including actions, dependencies, scheduled runs, states, and code viewing.

What is Apache Airflow?

Apache Airflow is an open-source data pipeline orchestration platform (workflow scheduler). It orchestrates the components that process data in data pipelines across distributed systems. It is designed to express ETL pipelines as code and to represent tasks as graphs that run with defined relationships and dependencies.

Data pipelines involve executing tasks in a specific order. A Directed Acyclic Graph (DAG) is a graph, coded in Python, that represents the overall pipeline with a clear execution path and without loops or circular dependencies. The DAG defines the tasks, their running order, and their frequency. Tasks are represented as nodes, and the upstream and downstream dependencies declared between them create the DAG structure. The DAG is the center that connects all the Airflow operators and lets you express dependencies between the different stages of the pipeline.

Apache Airflow solutions are used for batch data pipelines. Airflow lets users run thousands of tasks. Many plugins and built-in operators are available to integrate jobs with external systems, and they can be customized for specific use cases. Each task is implemented in Python and defined in DAG files that contain metadata about its execution. A task is scheduled or triggered after its upstream tasks have completed.

Hooks are interfaces that let Airflow connect quickly to APIs and databases without writing low-level code. They work with connections, whose encrypted credentials and API tokens are stored as metadata.

The Airflow UI provides features and visualizations (such as workflow status) that give insight into the pipeline and its DAG runs.
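Declaring upstream and downstream dependencies is what turns a set of tasks into a DAG, and the "acyclic" requirement is what guarantees a valid running order. The sketch below uses a hypothetical `Task` class whose `>>` operator imitates Airflow's dependency syntax. It then uses the standard library's `graphlib.TopologicalSorter` to derive an execution order and to reject a loop. It is an illustration, not Airflow's implementation.

```python
from graphlib import CycleError, TopologicalSorter


class Task:
    """Toy task node; `a >> b` declares that b runs after a (mimicking Airflow's syntax)."""

    def __init__(self, task_id: str, registry: dict[str, set[str]]):
        self.task_id = task_id
        self.registry = registry  # task_id -> set of upstream task_ids
        registry.setdefault(task_id, set())

    def __rshift__(self, other: "Task") -> "Task":
        self.registry[other.task_id].add(self.task_id)
        return other  # returning `other` allows chaining a >> b >> c


graph: dict[str, set[str]] = {}
extract, transform, load = (Task(name, graph) for name in ("extract", "transform", "load"))
extract >> transform >> load

print(list(TopologicalSorter(graph).static_order()))  # ['extract', 'transform', 'load']

# Adding a back edge creates a loop, so the graph is no longer a valid DAG.
load >> extract
try:
    list(TopologicalSorter(graph).static_order())
except CycleError as err:
    print("cycle detected:", err.args[1])
```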

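A hook reads host, login, password, port, and schema from a stored connection rather than hard-coding them. Connections are usually stored in Airflow's metadata database, where passwords are encrypted with a Fernet key. They can also be supplied as `AIRFLOW_CONN_{CONN_ID}` environment variables in URI form. The sketch below parses such a URI with `urllib.parse`. `ConnectionInfo` and `connection_from_env` are hypothetical stand-ins, not Airflow's `Connection` class or any hook's API, and the URI is made up.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional
from urllib.parse import unquote, urlsplit


@dataclass
class ConnectionInfo:
    """Simplified stand-in for the fields a hook reads from a stored connection."""
    conn_type: str
    host: Optional[str]
    login: Optional[str]
    password: Optional[str]
    port: Optional[int]
    schema: str


def connection_from_env(conn_id: str,
                        environ: Optional[Mapping[str, str]] = None) -> ConnectionInfo:
    """Parse the URI stored in an AIRFLOW_CONN_<CONN_ID> environment variable."""
    environ = os.environ if environ is None else environ
    parts = urlsplit(environ[f"AIRFLOW_CONN_{conn_id.upper()}"])
    return ConnectionInfo(
        conn_type=parts.scheme,
        host=parts.hostname,
        login=unquote(parts.username) if parts.username else None,
        password=unquote(parts.password) if parts.password else None,  # %40 -> @
        port=parts.port,
        schema=parts.path.lstrip("/"),
    )


example_env = {"AIRFLOW_CONN_MY_WAREHOUSE": "postgres://etl_user:s3cr%40t@db.example.com:5432/analytics"}
conn = connection_from_env("my_warehouse", example_env)
print(conn.conn_type, conn.host, conn.port, conn.schema)  # postgres db.example.com 5432 analytics
```

Keeping credentials in a connection means the pipeline code only refers to a connection ID, so the same DAG can point at different environments.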
Apache Airflow ETL Pipelines and Machine Learning Concepts: build and run ETL pipelines as code with the Apache Airflow orchestrator. Topics covered include the benefits of using Apache Airflow technology, Airflow hooks, and Airflow operators and sensors.

Airflow Operators and Sensors

Integrate data from internal and external sources by connecting to third-party APIs and data stores in a modular architecture.
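A sensor is a task that waits for an external condition before downstream tasks run, such as a file landing or a partition appearing. Airflow sensors expose parameters such as `poke_interval` and `timeout`. The plain-Python sketch below shows the basic poke loop under those two settings. `wait_for` and `file_has_landed` are hypothetical, and the sketch raises the built-in `TimeoutError` rather than Airflow's own sensor timeout exception.

```python
import time
from typing import Callable


def wait_for(condition: Callable[[], bool], poke_interval: float, timeout: float) -> bool:
    """Poll `condition` every `poke_interval` seconds until it is True or time runs out."""
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() + poke_interval > deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(poke_interval)


calls = {"count": 0}


def file_has_landed() -> bool:
    # Hypothetical check that succeeds on the third poke; a real sensor might look for a file.
    calls["count"] += 1
    return calls["count"] >= 3


print(wait_for(file_has_landed, poke_interval=0.01, timeout=1.0))  # True
print(calls["count"])  # 3
```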
